Reflecting on One Year at Bedrock: Transformative LLM re:Inventing

"The power of a machine learning model is not just in its size, but in how well it can generalize as it scales." – Geoffrey Hinton, recipient of the Nobel Prize in Physics (2024) and the A.M. Turing Award (2018).

re:Invent has wrapped up, and this week marks my one-year milestone at Bedrock. As I reflect on the past year, it's a good moment to look back at both the highlights and the hurdles, the moments of happiness and those of frustration. Notably, Bedrock celebrated its first anniversary in October, a significant milestone that highlights the team's rapid growth and impact. Having helped build and found several products at Amazon, I feel fortunate to be part of Bedrock, both a primitive service and a cutting-edge product, and to grow alongside it. It's been an exciting journey of growth, collaboration, and learning, and I'm grateful for the opportunity to contribute to such a transformative initiative. It has been challenging and fulfilling.

Anthropic Collaboration: Building Something Bigger Together

I was one of the first two engineers when the project to build serverless inference for Anthropic models on Bedrock was funded. One of the most significant aspects of my time at Bedrock has been working closely with our external partner, Anthropic, a leading frontier model provider. From the start, my team within Bedrock inference has been the only team working directly with Anthropic, and this unique partnership has involved a great deal of collaborative work. I remember an interesting moment when an L7 colleague messaged me on Slack: "Andy, good to meet you. I've heard your name mentioned even by [XXX] (the co-founder of Anthropic), when we were working on this ... project." It's always humbling to hear that your name is being discussed at such high levels.

Our collaboration with Anthropic has been deep and ongoing. Of all the companies I have collaborated with, Anthropic stands out: it operates with zero overhead and has a rare and appealing sense of rising momentum. This partnership led to some truly amazing product launches, such as the latest, most intelligent models, Computer Use, the Claude 3.5 Haiku Speed SKU on Trainium2, and last week's "The Claude in Amazon Bedrock Course". I vividly recall several late nights when both teams worked tirelessly to debug issues, sometimes staying up the entire night. Of course, the happy hours in Seattle's South Lake Union (SLU) celebrating successful launches were just as memorable.

Privilege, Confidentiality, and Exclusivity

Working so closely with Anthropic has come with a significant level of responsibility and confidentiality. My skip-level manager's teams report directly to a VP. Everything we do is considered privileged and confidential and can't be shared beyond my skip-level manager's teams, even within the same department; we have secured codebases, documents, channels, and basically everything else related to our work. This sense of exclusivity and responsibility reminds me of my time in Alexa (Device org), where access to certain departments required specific clearance.

For nearly a year, our team's work has been shrouded in secrecy. We've been unable to share much with others outside our direct teams, including other Bedrock teams. The good news is that people recognize the work we've done in delivering Claude models; while the details are closely guarded, the results speak for themselves. Over time, we've grown accustomed to operating in this high-trust, high-privacy environment. You'll also find L10 (Distinguished Engineer), L8 (Senior Principal), and L7 (Principal) ICs participating in team operational meetings as well as partner and customer calls; such senior titles are rarely seen in other product teams.

Claude on Trainium

Thanks to the efforts of many teams, this has been a significant milestone, and I feel privileged to have been deeply involved in several key aspects of this project over the past year. There were many unknowns and challenges along the way, but through careful setup, testing, and benchmarking, we worked closely with the Anthropic and Annapurna teams to achieve great results. The outcome turned out to be a big win, and it was exciting to see the announcement spread during re:Invent, especially after Peter's "Monday Night Live" session. An interesting coincidence also occurred during this project. Because of my long-standing part-time contributions to the Linux Foundation community (unrelated to work), I was invited to San Francisco as a member of the PyTorchCon Program Committee. While there, I participated in a panel discussion where James Bradbury, Head of Compute at Anthropic, was also a speaker. The following week, back in Seattle, I worked on tasks that happened to involve several interactions with James on Claude on Trainium. It was a serendipitous moment: meeting someone outside of work and then finding ourselves working together full-time on the same project.

Milestones and Deliverables: A Year of Innovation

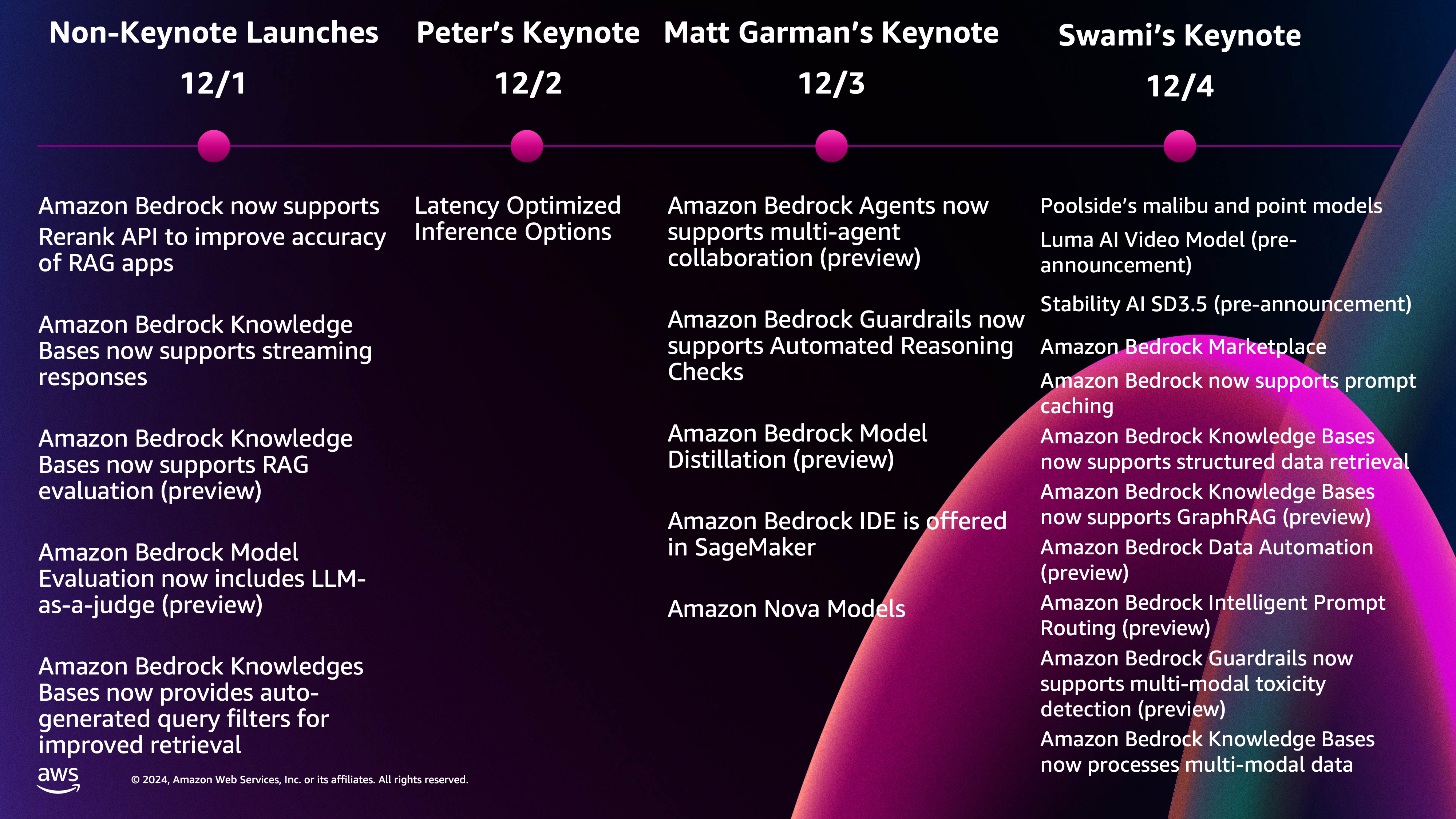

(re:Invent 2024 Amazon Bedrock launches)

I'm on the Bedrock inference team, a team behind this year's most trending keywords, including 'Claude', 'Trainium', and 'Computer Use'. Looking at the work we've accomplished, the list of deliverables is nothing short of impressive. In the past year, we've launched several net-new innovations and features that have pushed the boundaries of AI and model inference. Some of the key milestones include:

I've had the privilege of contributing to or leading these launches, and the pace of innovation here is intense. Each month brings one or two major launches, and that is just the external-facing work. Behind the scenes, there are countless internal deliverables that contribute to the overall momentum. While quarterly or yearly deliveries may have been the norm in the past, at Bedrock it's all about a monthly or bi-weekly cadence, with a constant push to meet what some may call "unreasonable" timelines. You never know what the next model will be like. The complexity and effort required to meet these fast-paced expectations should never be underestimated.

Move Fast

Bedrock and the broader GenAI department are known for their fast-paced environment. One thing I observed as a new joiner is that people rarely reply to Slack messages. Initially, this felt uncomfortable, and I wondered whether it was something personal. However, as I settled in, I realized that it's simply a matter of time. There's just not enough bandwidth to keep up with every message, even when you want to support your team.

In some ways, I've come to appreciate the return-to-office (RTO) setup, especially now that we have newly assigned desks in our Nitro North office building. With people in the office, it's easier to find colleagues and have quick, direct conversations. Junior team members can easily approach senior colleagues to ask questions and get help to overcome obstacles. This has improved our communication efficiency significantly. Another interesting observation is the prevalence of cold calls in our culture. It’s not unusual to receive calls at any time from your colleagues, skip-level managers, or even GMs. On the very first day I joined Bedrock, I was already hosting design reviews and being asked to jump into tasks. This unique style of working can be intense but also fosters a sense of urgency and immediate engagement.

An AI researcher shared his typical daily schedule at OpenAI in a tweet. It's interesting to see how others in the same field organize their day. Balancing work and life is always a challenge, especially in a high-pressure environment like Bedrock. But I find that the excitement of working on such a transformative product makes it all worthwhile. The product we’re building has the potential to shape industries and change the way we interact with technology, and that gives me a deep sense of fulfillment.

Team 3x

My manager's team has tripled in size, with more people expected to join in the coming months. Bedrock is expanding rapidly, and with that growth comes the challenge of managing increasing complexity, new team members, and cross-country collaborations. As our team grows, balancing workloads and maintaining effective communication becomes a delicate art. Peer manager partnerships and collaboration are crucial, especially when coordinating across different time zones and cultural contexts.

Bedrock is actively hiring, and I've had the chance to conduct many interviews. At Amazon, I've participated in over 110 interviews, 40 of them specifically for Bedrock, which averages close to one interview per week over the year.

Building Primitive Services

Peter DeSantis gave a highly comprehensive session at re:Invent this year, providing an in-depth explanation of the complexities of machine learning workloads, covering both model training and inference. I won't dive into the specifics of prefill and token generation here. For model inference, getting a model up and running is no easy task. Bedrock does the heavy lifting of orchestrating compute resources so that customers can access the most advanced and intelligent models. I've enjoyed working on foundational building blocks like LLM (Large Language Model) inference.

In his 2023 letter to shareholders, Andy Jassy explained the concept of "Primitive Services". My previous experience spans AWS products such as S3, Containers, and Virtualization, and I've found that working on primitive services offers a unique sense of satisfaction. Some have called Bedrock the "Lambda of LLMs"; I think it is more than that. As Matt Garman stated in an interview with SiliconANGLE, "Inference is the next core building block. If you think about inference as part of every application, it becomes integral, just like databases." It's exciting to see how the generative AI space is evolving, and I'm thrilled to be part of Bedrock at such a transformative moment in AI history.

What Comes Next

As we continue to grow, I foresee a future where AI models become even more deeply integrated into our daily lives. At NeurIPS, Ilya Sutskever remarked that "Pre-training as we know it will end." So what comes next? "Agents", "Synthetic data", and "Inference time compute ~O(1)". Today's foundation models (FMs) and LLMs may increasingly resemble the everyday software applications we use, with updates and iterations becoming as routine as downloading a new browser version from the App Store. In this highly competitive landscape, the version numbers of models from different providers could eventually resemble browser version numbers, reflecting regular updates and improvements. We also expect the knowledge barrier to using these models to shrink, making them accessible to a broader audience. As Jason Clinton, Anthropic's CISO, shared at re:Invent, more innovation is expected to emerge in AI agents. This week, Anthropic published new research, "Building effective agents". "2025 will be the year of agentic systems".

Anthropic has done a significant amount of impactful work here, particularly with the recently released Model Context Protocol (MCP) and Computer Use (apologies for mentioning it repeatedly, but it's an incredibly powerful feature). One paper I read that explores its potential, "The Dawn of GUI Agent: A Preliminary Case Study with Claude 3.5 Computer Use" by Show Lab at the National University of Singapore, is definitely worth checking out. These efforts have played a crucial role in kick-starting the entire ecosystem.

AI Safety

Responsible AI and AI safety are unavoidable topics, spanning both technical and social domains. Both Anthropic and Amazon have made serious investments in this area. The AI safety research findings from Chatterbox Labs make for intriguing reading. Working closely with Anthropic has been particularly insightful, as their deep understanding of and dedication to AI trust and safety are truly impressive. This focus is evident in the interview with Anthropic's CEO Dario Amodei on the Lex Fridman Podcast and in their renowned article "Anthropic's Responsible Scaling Policy," which introduces the concept of "AI Safety Levels (ASL)." These efforts underscore their commitment to ensuring the safety of artificial intelligence.

Additionally, in a Financial Times interview, Dario highlighted two key priorities for the company in 2025, one of which is "mechanistic interpretability, looking inside the models to open the black box and understand what's inside them." He described this as "perhaps the most societally important".

I couldn’t agree more: interpretable machine learning and AI safety are complementary and mutually reinforcing. Adopting a cautious yet optimistic stance toward current advancements in AI is both wise and necessary, and I look forward to continued progress in this field.

Overall, my first year at Bedrock has been an incredible journey. I’ve learned so much, faced new challenges, and contributed to innovative products that are making a real impact. I’m excited to see what the future holds as we continue to grow, innovate, and push the boundaries of what’s possible in the world of AI and cloud computing.

A heartfelt thanks to Ben, Tim, Tommy, Evan, Nova, Jin, Frank, Josh, Peter, Justin, and all the Anthropic teams we collaborated with, and to everyone at Bedrock, my managers, Ravi, Shashank, and all my amazing colleagues. It has been an absolute privilege to collaborate and work alongside you all this year.